AI Breakdown, or Takeaways From the 78-Page Llama 2 Paper (Deepgram)

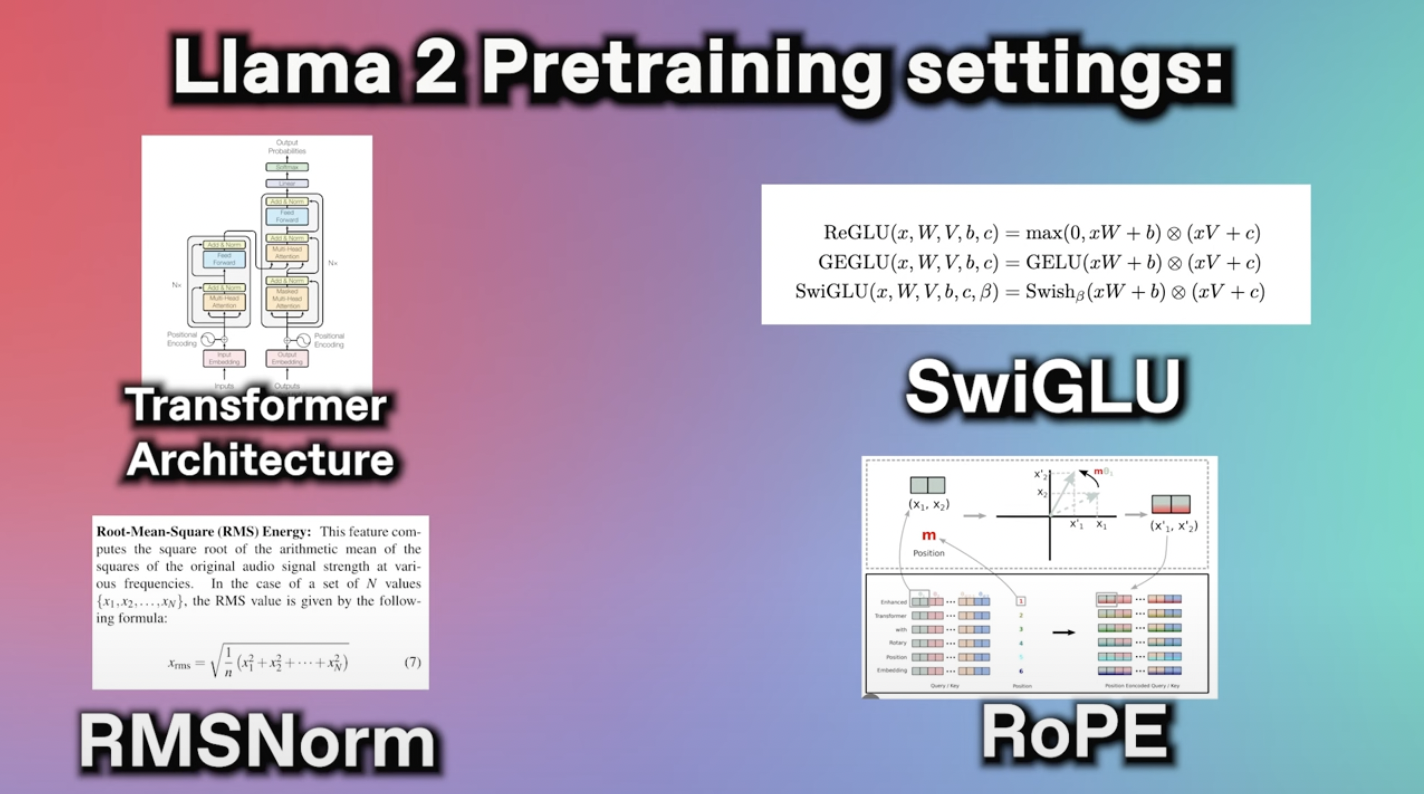

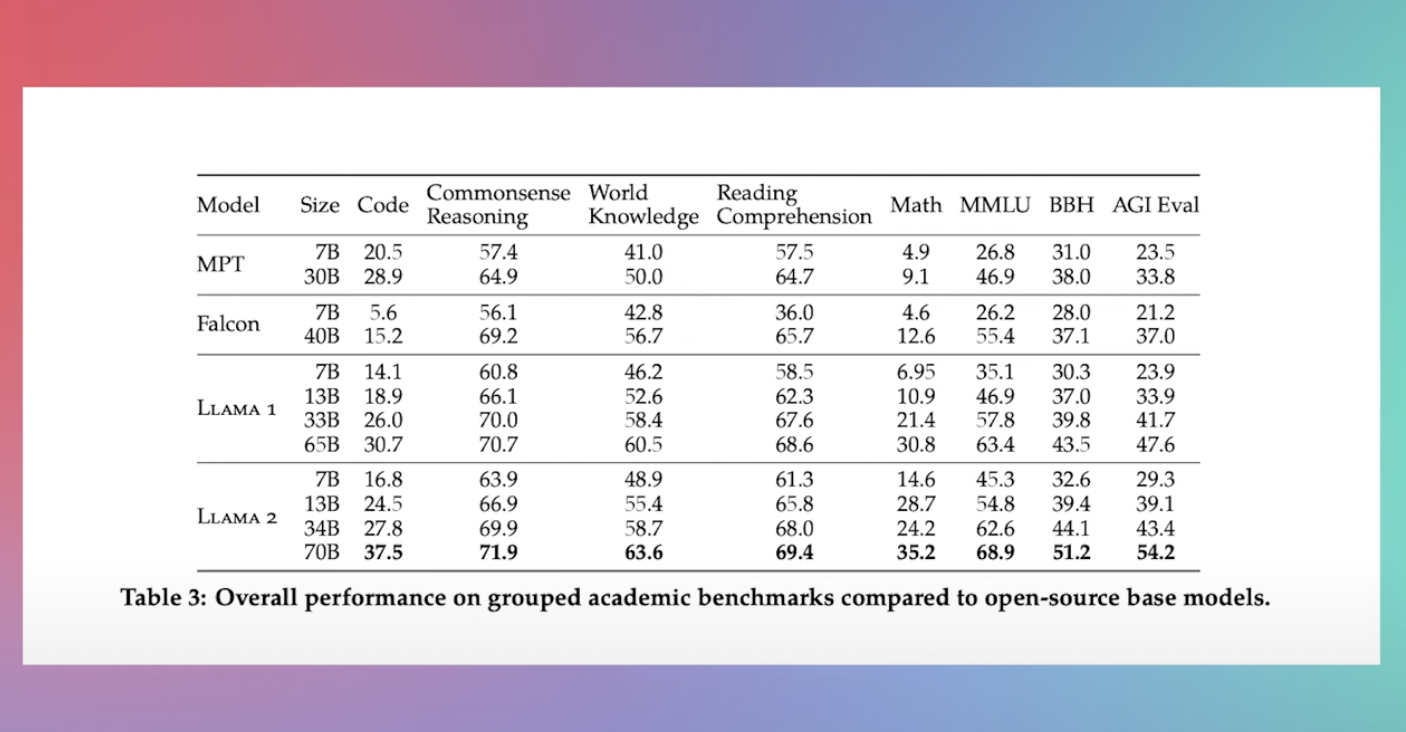

"In this work, we develop and release Llama 2, a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 billion parameters."

Llama 2, much like other AI models, is built on a classic Transformer architecture. To make the 2,000,000,000,000 (2 trillion) tokens and internal weights easier to handle, Meta ...

The Llama 2 research paper details several advantages the newer generation of models offers over the original LLaMA models.

For full context, two video walkthroughs are available: "Llama 2 Explained - Arxiv Dives w/ Oxen.ai", and "LLAMA 2 Full Paper Explained" by hu-po (scheduled for Jul 19, 2023).

Opt for a machine with a high-end GPU, such as NVIDIA's RTX 3090 or RTX 4090.

Llama 2 encompasses a range of generative text models, both pretrained and fine-tuned, with sizes from 7 billion to 70 billion parameters. Compared with the original LLaMA, it was trained on 40% more data and has a 4k-token context.

Quantized GGUF builds are published in the TheBloke/Llama-2-70B-Chat-GGUF repository (initial GGUF model commit 9f0061c; models made with llama.cpp commit e36ecdc). The repository's quantization notes describe one variant as "medium, balanced quality - prefer using Q4_K_M" and another as "large, very low quality loss - recommended". One of the files is `llama-2-70b-chat.Q5_K_M.gguf`.

One user, knob-0u812, reports running `llama-2-70b-chat.Q5_K_M.gguf` for generation on an M3 Max (16-core CPU, 128 GB, 40-core GPU) from a fresh install of TheBloke/Llama-2-70B-Chat-GGUF.

The main differences between Llama 2 and Llama are a larger context length (4096 instead of 2048 tokens) and training on a larger dataset.

Hardware requirements are a recurring question. A GitHub issue titled "Hardware requirements for Llama 2" (#425, opened by g1sbi on Jul 19, 2023; closed, 21 comments) includes a report of running `llama-2-13b-chat.ggmlv3.q4_0.bin` with 43/43 layers offloaded to the GPU.

A forum question asks how much RAM a CPU setup needs for Llama 2 70B with a 32k context: 48, 56, 64, or 92 GB? The poster adds that, supposedly, 48 GB is all you would need for 16k with exllama.

It is likely that you can fine-tune the Llama 2 13B model using LoRA or QLoRA on a single consumer GPU with 24 GB of memory, and using QLoRA requires even less GPU memory and ...
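The memory questions above mostly come down to simple arithmetic on parameter count and bits per weight. The sketch below is a rough, weights-only estimate and not a figure from the paper or any of the quoted threads. The function name `weight_memory_gib`, the nominal parameter counts (7e9, 13e9, 70e9), and the precision labels are assumptions made for illustration. Real usage is higher, because the KV cache (which grows with context length), activations, and framework overhead are all ignored here.

```python
# Rough, weights-only memory estimate for Llama 2 model sizes.
# Assumptions (not from the source): nominal parameter counts and
# idealized bits-per-weight; real quantized files carry extra metadata.

def weight_memory_gib(n_params: float, bits_per_weight: float) -> float:
    """Return the approximate memory for the weights alone, in GiB."""
    bytes_total = n_params * bits_per_weight / 8
    return bytes_total / 2**30


# Nominal sizes; the true parameter counts differ slightly.
MODEL_SIZES = {"7B": 7e9, "13B": 13e9, "70B": 70e9}

# Idealized precisions; K-quant formats use somewhat more than 4 or 5 bits.
PRECISIONS = {"fp16": 16, "int8": 8, "4-bit": 4}


def estimate_table() -> dict:
    """Build a {model: {precision: GiB}} table, rounded to one decimal."""
    return {
        name: {
            label: round(weight_memory_gib(n_params, bits), 1)
            for label, bits in PRECISIONS.items()
        }
        for name, n_params in MODEL_SIZES.items()
    }


if __name__ == "__main__":
    for name, row in estimate_table().items():
        cells = ", ".join(f"{label}: {gib} GiB" for label, gib in row.items())
        print(f"{name}: {cells}")
```

Under these assumptions, the 13B weights at 4 bits come to roughly 6 GiB, which is consistent with the claim that QLoRA fine-tuning can fit on a 24 GB consumer GPU, while the 70B weights at 4 bits alone are already above 32 GiB.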
